Machine Learning: Core Concepts, Models, and Key Algorithms

Machine Learning (ML) is a vital subfield of Artificial Intelligence (AI) and Data Science that focuses on building systems that learn automatically from data and improve their performance with experience, without being explicitly programmed for every task. It has transformed data analysis and decision-making by making it possible to identify patterns and extract knowledge from vast amounts of data.

Foundational Concepts

To deeply understand Machine Learning, it is essential to be familiar with the main components that form an ML model:

1. Data Structure

- Data: A collection of information that serves as the primary source of knowledge for the model. The quality and quantity of the data directly impact the model’s success and accuracy.

- Feature (Attribute): An input variable that the model uses to make predictions, i.e., a measurable characteristic of each instance (e.g., house size, customer age). Selecting, transforming, and constructing these features (called Feature Engineering) is a crucial and often time-consuming step in model development.

- Label (Target Variable): The output or value that the model intends to predict (e.g., the house price). In supervised learning problems, these labels are present in the training data.

2. The Learning and Optimization Process

- Model: The mathematical function that the algorithm creates after training, mapping the input features to the expected output (label). This model effectively holds the knowledge extracted from the data.

- Training: The process of adjusting the model’s internal parameters using the training data so that the model can make the best possible predictions.

- Cost Function (Loss Function): A function that measures the model's error numerically, i.e., how far its predictions are from the actual values (for example, the mean squared error for regression). The algorithm's goal during training is to minimize this value.

- Optimizer: An algorithm (such as Gradient Descent) that updates the model's parameters step by step in the direction that reduces the cost function.
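To make the cost function and optimizer concrete, here is a minimal sketch that fits a one-feature linear model (prediction = w·x + b) with plain gradient descent on the mean squared error. The toy data (generated from y = 2x + 1), the learning rate `lr`, and the step count are illustrative assumptions, not tuned settings.

```python
def mse(w, b, xs, ys):
    """Cost function: mean squared error of the linear model w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Optimizer: repeatedly move (w, b) against the gradient of the MSE."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))  # dMSE/dw
        grad_b = 2 / n * sum(errors)                            # dMSE/db
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3), mse(w, b, xs, ys))
```

Each step nudges `w` and `b` against the gradient, so the cost shrinks until the parameters settle near the values that generated the data.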

Core Machine Learning Paradigms

ML algorithms are categorized into three main approaches based on the type of input data and the method used for learning:

1. Supervised Learning

This approach is used when the data is labeled, and the model learns how to convert the inputs into correct outputs.

- Classification:

- Goal: To predict a discrete output or category.

- Example: Determining whether an email is “spam” or “not spam,” or classifying a tumor as “malignant” or “benign.”

- Regression:

- Goal: To predict a continuous numerical value.

- Example: Predicting the price of a property or the fuel consumption rate of a car.
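The difference between the two tasks shows up in the model's output. Below is a minimal sketch of a classifier, assuming logistic regression (one of the algorithms listed later in this article) trained on a hypothetical one-feature dataset: the model produces a continuous probability, and thresholding it at 0.5 (an assumed cutoff) turns that into a discrete class. A regression model would instead return the continuous value itself.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic(xs, labels, lr=0.1, epochs=1000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        diffs = [sigmoid(w * x + b) - y for x, y in zip(xs, labels)]
        w -= lr * sum(d * x for d, x in zip(diffs, xs)) / n
        b -= lr * sum(diffs) / n
    return w, b


def classify(w, b, x, threshold=0.5):
    """Map the continuous probability to a discrete class (0 or 1)."""
    return 1 if sigmoid(w * x + b) >= threshold else 0


# Hypothetical data: negative feature values belong to class 0, positive to class 1.
xs = [-4.0, -3.0, -2.0, -1.0, 1.0, 2.0, 3.0, 4.0]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic(xs, labels)
print(sigmoid(w * 0.5 + b), classify(w, b, -2.5), classify(w, b, 0.5))
```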

2. Unsupervised Learning

In this approach, the machine works only with unlabeled data and must discover hidden structures, clusters, or patterns in the data on its own.

- Clustering:

- Goal: Grouping similar data points together.

- Application: Segmenting a company’s customers into different groups based on purchasing behavior for targeted advertising.

- Dimensionality Reduction:

- Goal: Reducing the number of input features (dimensions) while preserving as much of the important information as possible.

- Application: Simplifying models and visualizing complex high-dimensional data (e.g., with Principal Component Analysis, PCA).

3. Reinforcement Learning (RL)

Learning occurs through interaction with an environment. An Agent performs an action in the environment and receives a reward or penalty. The goal is to learn the best Policy (a rule for choosing an action in each situation) that maximizes the long-term cumulative reward.

- Applications: Training robots to perform physical tasks, building AI for complex games, and developing autonomous control systems.
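A minimal sketch of the agent-environment loop, assuming tabular Q-learning (one common RL algorithm among many) and a hypothetical five-cell corridor in which the agent earns a reward of 1 only when it reaches the rightmost cell. The discount factor `gamma` controls how much future rewards count, which is how the agent values long-term cumulative reward; all hyperparameters are illustrative.

```python
import random

N_STATES = 5            # hypothetical corridor: cells 0..4, cell 4 is the goal
ACTIONS = (-1, +1)      # index 0 = move left, index 1 = move right
GOAL = N_STATES - 1


def step(state, action):
    """Environment: move within the corridor; reward 1.0 only when the goal is reached."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action] value estimates
    for _ in range(episodes):
        state = 0
        for _ in range(100):                     # cap the episode length
            if rng.random() < epsilon:           # explore: random action
                a = rng.randrange(len(ACTIONS))
            else:                                # exploit: best known action, random tie-break
                best = max(q[state])
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Move Q toward the reward plus the discounted value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

After training, the greedy policy should move right in every non-goal cell, because that reaches the reward in the fewest (and therefore least discounted) steps.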

Common Machine Learning Algorithms

Choosing the right algorithm strongly affects the final result; the best choice depends on the problem type and the characteristics of the data.

A) Key Supervised Learning Algorithms

- Linear and Logistic Regression:

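- Linear Regression: A simple statistical model that fits the best straight line (or, with several features, a hyperplane) through the data, usually by minimizing the squared error, to predict a continuous output. It assumes a linear relationship between the inputs and the output.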

- Logistic Regression: A classification algorithm that passes a linear combination of the features through the logistic (sigmoid) function to estimate the probability of a binary outcome; it is commonly used for “yes/no” predictions.

- Decision Tree and Random Forest:

- Decision Tree: A flowchart-like structure that segments data based on a set of simple rules. These models are highly interpretable due to the transparency of their rules.

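- Random Forest: A powerful Ensemble method that combines the predictions of many decision trees, each trained on a random sample of the data and a random subset of the features. Aggregating their votes (or averages) typically gives higher accuracy and stability than a single tree.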

- Support Vector Machine (SVM):

- Function: Used mainly for classification. The algorithm finds a hyperplane (decision boundary) that separates the classes with the largest possible margin, i.e., the greatest distance to the nearest training points of each class.

- K-Nearest Neighbors (KNN):

- Function: An instance-based algorithm. To make a prediction for a new sample, it finds the k closest samples in the training data and determines the output by majority vote (for classification) or by averaging their values (for regression).
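The KNN procedure described above fits in a few lines. This sketch assumes Euclidean distance (via `math.dist`, Python 3.8+) and a hypothetical two-group toy dataset; practical implementations often add feature scaling and faster neighbor search.

```python
import math
from collections import Counter


def nearest(train_X, query, k):
    """Indices of the k training samples closest to query (Euclidean distance)."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    return order[:k]


def knn_classify(train_X, train_y, query, k=3):
    """Classification: majority vote among the k nearest labels."""
    votes = Counter(train_y[i] for i in nearest(train_X, query, k))
    return votes.most_common(1)[0][0]


def knn_regress(train_X, train_y, query, k=3):
    """Regression: average of the k nearest target values."""
    idx = nearest(train_X, query, k)
    return sum(train_y[i] for i in idx) / k


# Hypothetical toy data: two well-separated groups.
train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
labels = ["A", "A", "A", "B", "B", "B"]
values = [1.0, 1.0, 1.0, 10.0, 10.0, 10.0]
print(knn_classify(train_X, labels, (0.5, 0.5)))
print(knn_regress(train_X, values, (5.2, 5.2)))
```

Note that there is no training step: the "model" is simply the stored data, which is why KNN is called instance-based.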

B) Key Unsupervised Learning Algorithms

- K-Means Clustering:

- Function: Divides data into k groups, where k is specified in advance. The algorithm first picks k points at random as the initial cluster centers. Each data point is then assigned to its nearest center, and each center is moved to the mean of the points assigned to it; these two steps repeat until the assignments stop changing.

- Principal Component Analysis (PCA):

- Function: A linear dimensionality reduction technique that transforms the original variables into a smaller set of new, uncorrelated variables called principal components, chosen to preserve as much of the data's variance (variability) as possible.
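The K-Means loop described above (random initial centers, assign, update, repeat) can be sketched as follows. The function name, the `seed` parameter, and the toy points are assumptions for illustration. Because the outcome depends on the random initial centers, k-means can settle in a poor local optimum, which is why practical implementations commonly run several random restarts.

```python
import math
import random


def kmeans(points, k, max_iters=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on a list of coordinate tuples."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # pick k data points at random as initial centers
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its points (keep it if the cluster is empty).
        new_centers = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
        if new_centers == centers:  # centers stopped moving, so assignments are stable
            break
        centers = new_centers
    return centers


# Hypothetical data: two groups far apart along the x-axis.
points = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (100.0, 1.0), (101.0, 1.0), (102.0, 1.0)]
print(sorted(kmeans(points, k=2)))
```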

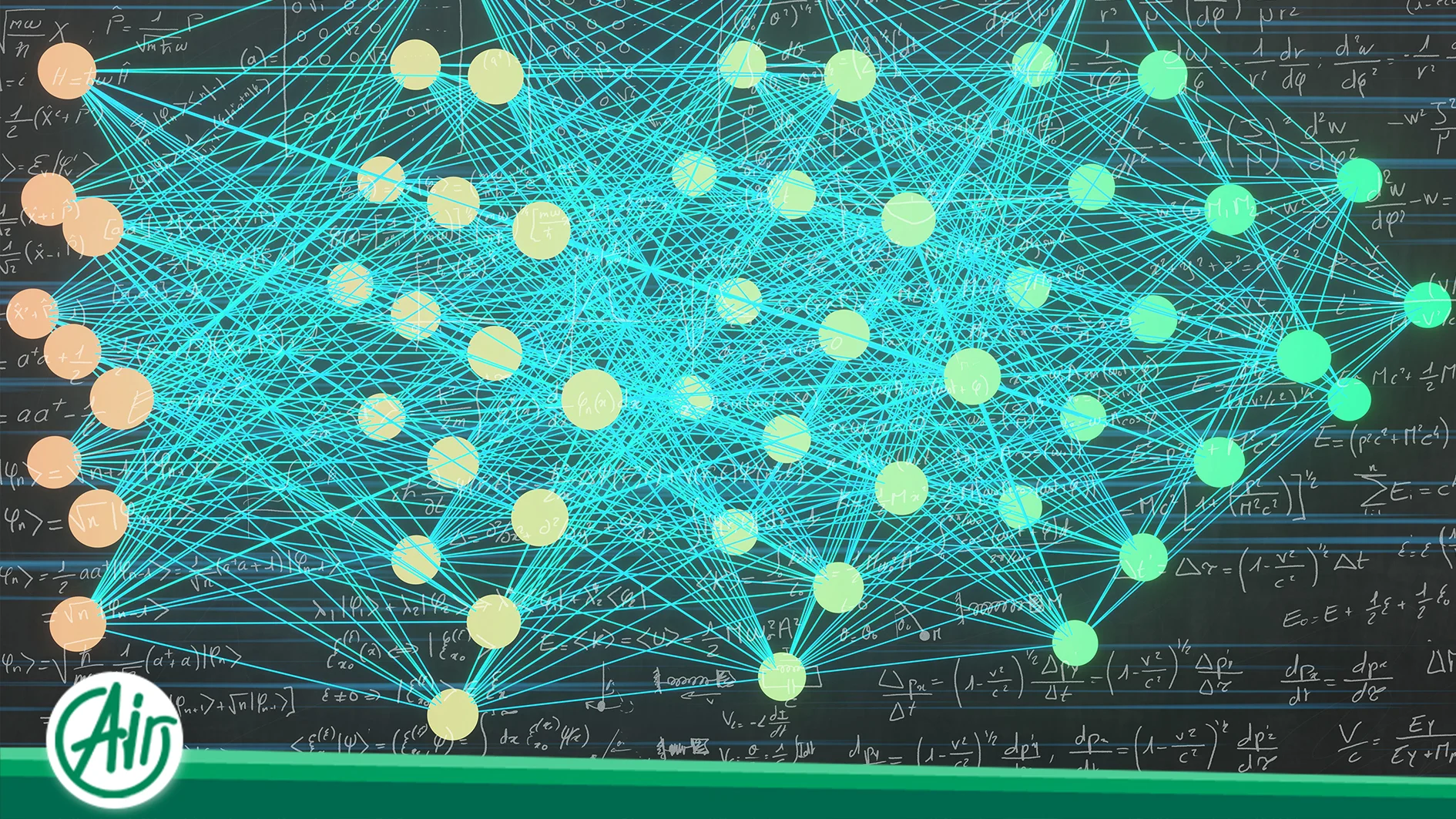
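For PCA, a minimal sketch of finding the first principal component: center the data, form the covariance matrix, and use power iteration (one simple way to find its top eigenvector; libraries commonly use an SVD instead) to get the direction of maximum variance. Projecting the centered data onto that direction reduces it to one dimension. The function name and the dataset (hypothetical points lying close to the line y = 2x) are assumptions.

```python
import math


def first_principal_component(data, iters=200):
    """Top principal component via power iteration on the sample covariance matrix."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)] for i in range(d)]
    v = [1.0] * d  # starting vector (assumed not orthogonal to the top component)
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Variance along v (the top eigenvalue) as a share of the total variance.
    top_var = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    total_var = sum(cov[i][i] for i in range(d))
    # 1-D representation: projection of each centered point onto v.
    scores = [sum(v[j] * row[j] for j in range(d)) for row in centered]
    return v, top_var / total_var, scores


data = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.0)]
component, explained, scores = first_principal_component(data)
print(component, explained)
```

Here nearly all of the variance lies along one direction, so a single component summarizes the two original features with very little information lost.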

Deep Learning and Neural Networks

Deep Learning is a specialized subset of Machine Learning that uses Artificial Neural Networks with multiple hidden layers (Deep Layers) to solve complex problems. These networks can automatically learn useful features directly from raw data (such as images or text), greatly reducing the need for manual feature engineering.

1. Neural Networks and Their Structure

- Neural Network: A collection of nodes (neurons) organized into different layers. Each connection between nodes has a weight that is adjusted during training.

- Input Layer: The layer that receives the raw data (features).

- Hidden Layers: The intermediate layers that perform most of the computation, extracting increasingly high-level patterns and features from the data. Having many such layers (a deep network) is what gives Deep Learning its name.

- Output Layer: The layer that provides the final result (prediction or classification).
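The layer structure above can be sketched as a forward pass through a tiny fully connected network: 2 inputs, 3 hidden neurons with a ReLU activation, and 1 linear output. The weights are hypothetical fixed numbers; in a real network they would be learned during training (for example, with gradient descent and backpropagation).

```python
def relu(z):
    """Rectified linear activation: passes positive values, zeroes out negatives."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


# Hypothetical weights for a 2 -> 3 -> 1 network (normally learned during training).
W_hidden = [[0.5, -0.5], [1.0, 1.0], [-1.0, 0.5]]
b_hidden = [0.0, 0.0, 0.0]
W_out = [[0.5, 2.0, 1.0]]
b_out = [-1.0]

x = [1.0, 2.0]                                          # input layer: raw features
hidden = dense(x, W_hidden, b_hidden, activation=relu)  # hidden layer
output = dense(hidden, W_out, b_out)                    # output layer (linear)
print(hidden, output)
```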

2. Key Deep Learning Algorithms

- Convolutional Neural Networks (CNNs): Designed to work with grid-like data such as images. They use convolution layers to identify local patterns (like edges and shapes) and have revolutionized computer vision.

- Recurrent Neural Networks (RNNs): These networks have recurrent (feedback) connections that carry a hidden state from one step to the next, letting them retain information about earlier inputs. This makes them suitable for sequential or time-series data (like text or speech).

- Transformers: A more recent and very powerful architecture built on the Attention mechanism; transformers are the foundation of Large Language Models (LLMs) and most modern Natural Language Processing (NLP) systems.
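At the heart of the attention mechanism is scaled dot-product attention, softmax(QKᵀ/√d_k)·V: each query is compared with every key, the scores are turned into weights by a softmax, and the output is the weighted average of the values. The sketch below assumes tiny hand-picked query, key, and value vectors; real transformers learn projections that produce Q, K, and V and run many attention heads in parallel.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention on lists of vectors: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


Q = [[10.0, 0.0], [1.0, 1.0]]   # hypothetical queries
K = [[10.0, 0.0], [0.0, 10.0]]  # hypothetical keys
V = [[1.0, 2.0], [3.0, 4.0]]    # hypothetical values
out, weights = attention(Q, K, V)
print(out, weights)
```

The first query matches the first key strongly, so its output is almost exactly the first value; the second query matches both keys equally and receives an even blend of both values.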

Hardware and Necessary Infrastructure

Complex Machine Learning models, especially deep learning models, require massive computational power. Managing large datasets and performing heavy matrix computations necessitates specialized infrastructure.

1. The Role of Graphics Processing Units (GPUs)

- Parallel Computing: Much of the work in training, especially for neural networks, consists of matrix multiplications and additions. Graphics Processing Units (GPUs) contain thousands of small cores that can perform these computations in parallel, which can make training up to tens of times faster than on Central Processing Units (CPUs).

- Training Acceleration: Without using GPUs, training a complex deep model could take weeks or months, but with their use, this time is reduced to hours or days.

2. The Importance of Dedicated GPU Servers

- Dedicated GPU Server: A physical server designed specifically to host and run one or more high-performance GPUs.

- High Performance: These servers have sufficient cooling and power supply capacity to sustain the continuous workload of GPUs, making them an essential solution for data science teams or large AI projects that require frequent model retraining.

- Flexibility and Control: Using a dedicated GPU server provides full control over the software environment (like the operating system and drivers) and the hardware, which is vital for optimizing model performance.

Dedicated Servers for Intensive Workloads

A dedicated server is a physical machine leased by a single user or organization, ensuring that all its resources, including powerful CPUs, ample RAM, and high-speed storage, are reserved exclusively for their use. For Machine Learning, a dedicated server is critical because it provides uncontended performance and the stability needed to run resource-intensive, continuous training processes that might otherwise be interrupted on shared platforms. Furthermore, dedicated environments offer full control over the operating system and software stack, allowing data science teams to customize the configuration to meet the optimization and security requirements of their specific project.

Future Trends and Novel ML Applications

The field of Machine Learning is constantly evolving, introducing new trends that push the boundaries of Artificial Intelligence.

1. Advanced Application Areas

- Generative AI: This domain includes models that can create new, realistic, and creative content, such as generating images from text, composing music, or writing high-quality articles.

- Natural Language Processing (NLP): Models that enable machines to understand and generate human language and to interact with people through it (such as chatbots and voice assistants).

- Anomaly Detection: Using ML to identify unusual patterns in data, which has critical applications in discovering financial fraud or diagnosing faults in industrial equipment.
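A simple statistical baseline for anomaly detection is the z-score: flag values that lie more than a chosen number of standard deviations from the mean. The sketch below assumes a threshold of 2.5 and a hypothetical list of sensor readings. It is a baseline rather than a learned model, and because a large outlier also inflates the standard deviation, more robust methods are often preferred in practice.

```python
import statistics


def zscore_anomalies(values, threshold=2.5):
    """Indices of values whose distance from the mean exceeds `threshold` standard deviations."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:  # all values identical: nothing stands out
        return []
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]


readings = [10, 11, 9, 10, 12, 10, 11, 9, 10, 50]  # hypothetical readings; the last one is unusual
print(zscore_anomalies(readings))
```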

2. Federated Learning and AI Ethics

- Federated Learning: A method for training a shared model across many decentralized local datasets (for example, on users' devices). Its main advantage is privacy: the raw data never leaves the devices, and only model updates are sent to be combined into the shared model.

- AI Ethics: As models become more powerful, fairness, transparency, and accountability in AI decision-making have become increasingly important, in particular to prevent bias in algorithms.
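Federated learning can be sketched with federated averaging (FedAvg, one widely used scheme, assumed here): each client trains the current global model on its own data, and the server receives only the resulting parameters, averaging them weighted by each client's data size. The one-parameter model (y ≈ w·x), the client datasets, and the hyperparameters are hypothetical, and both clients are simulated in one process.

```python
def local_update(w, xs, ys, lr=0.05, steps=5):
    """Client side: a few gradient descent steps on y ~ w*x using only local data."""
    n = len(xs)
    for _ in range(steps):
        grad = 2 / n * sum((w * x - y) * x for x, y in zip(xs, ys))
        w -= lr * grad
    return w


def federated_averaging(clients, rounds=20):
    """Server side: broadcast the global weight, collect client weights, average by data size."""
    w_global = 0.0
    total = sum(len(xs) for xs, _ in clients)
    for _ in range(rounds):
        # Each client trains locally; only the updated weight is returned, never the data.
        client_weights = [local_update(w_global, xs, ys) for xs, ys in clients]
        w_global = sum(len(xs) / total * w for (xs, _), w in zip(clients, client_weights))
    return w_global


clients = [
    ([1.0, 2.0], [3.0, 6.0]),            # client A's private data (hypothetical)
    ([1.0, 2.0, 3.0], [3.0, 6.0, 9.0]),  # client B's private data (hypothetical)
]
w = federated_averaging(clients)
print(w)
```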

Final Summary: The Cornerstone of Artificial Intelligence

Machine Learning (ML) is the core of modern Artificial Intelligence, enabling systems to learn automatically from data and improve their performance. The field is built on the foundational concepts of features, labels, and models, and training always aims for a model that fits the training data well while still generalizing to new, real-world data.

ML models primarily fall into three paradigms: Supervised Learning (for classification and regression), Unsupervised Learning (for clustering and dimensionality reduction), and Reinforcement Learning (for interaction and sequential decision-making).

The evolution of this field has led to Deep Learning, in which multilayer neural networks, especially when backed by GPU infrastructure such as dedicated GPU servers, can tackle highly complex problems in computer vision and natural language processing. Ultimately, the success of an ML model depends not only on selecting the right algorithm but also on the quality of feature engineering and on managing challenges such as overfitting (memorizing the training data instead of learning general patterns) and underfitting (failing to capture the patterns at all).